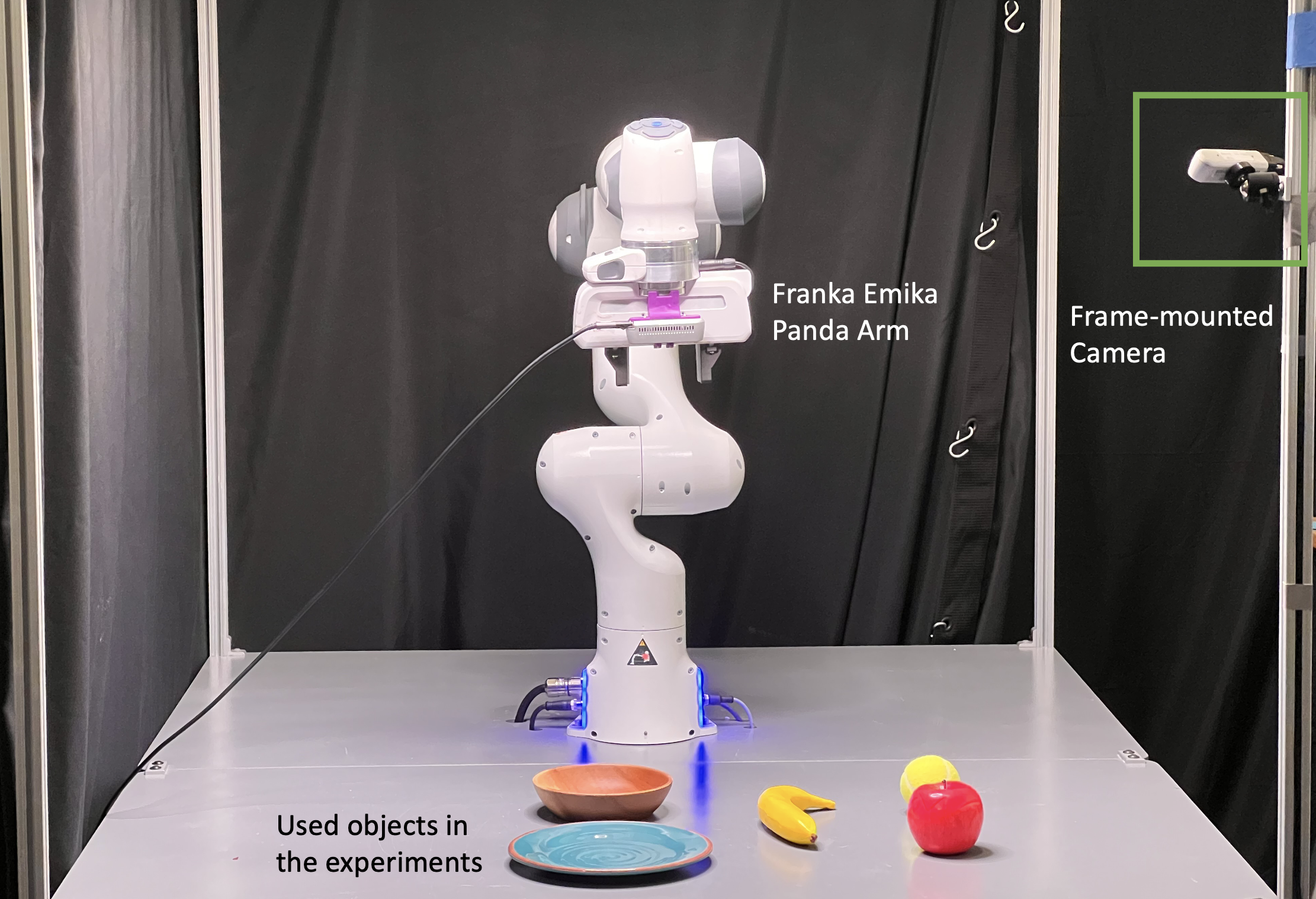

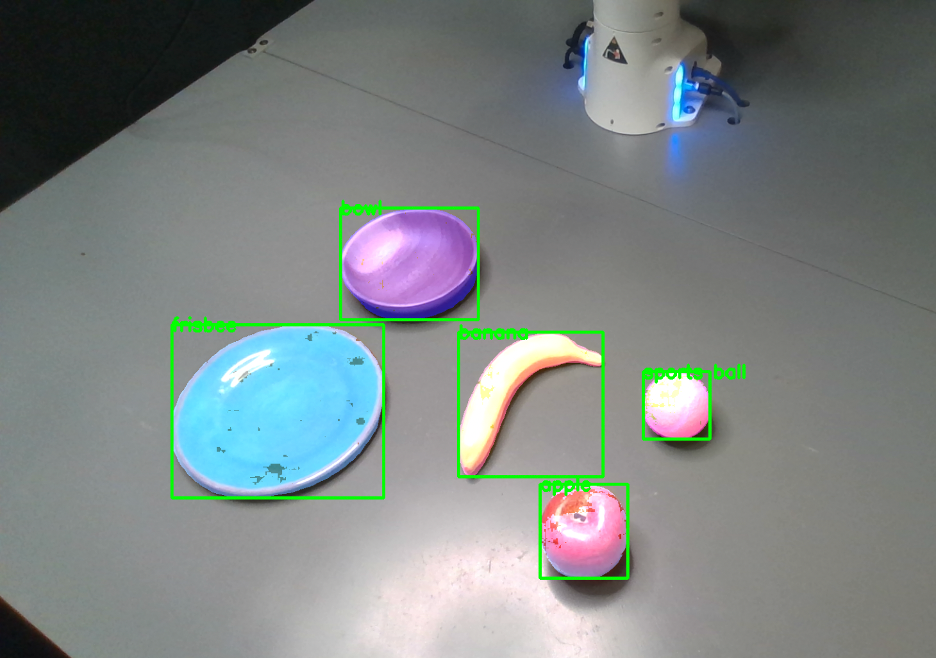

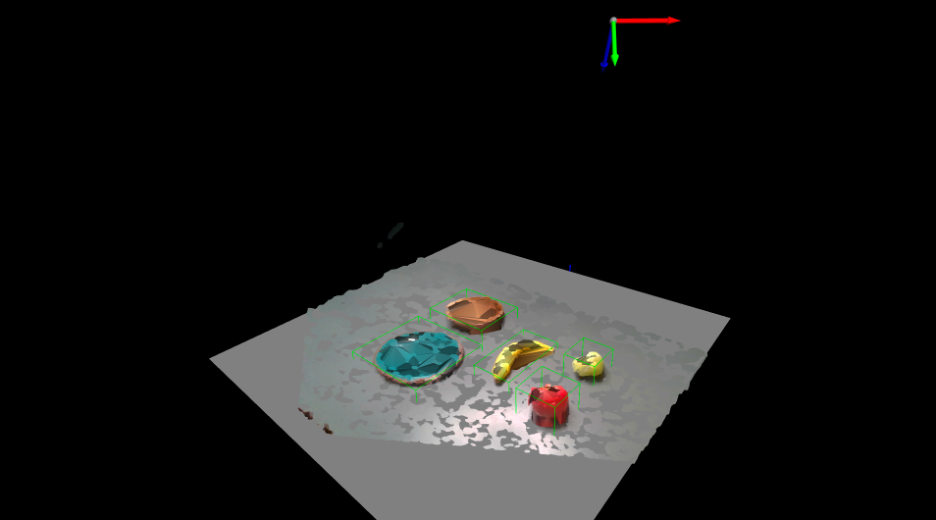

Our real-world set up contains a Franka Emika Panda robot arm with a parallel gripper, mounted on a table. We also have a Intel Realsense D435 camera mounted on the table frame, pointing 45 degrees down. Our vision pipeline contains six steps: first, based on the calibrated camera intrinsics and extrinsics, we reconstruct a partial point cloud for the scene. Second, we crop the scene to exclude volumes outside the table (e.g., background drops, etc.) Third, we use a RANSAC-based algorithm to estimate the table plane, and extract all object pointclouds on top of the table. Fourth, we use Mask-RCNN to detect all objects in the RGB space, and extract the corresponding point clouds. For objects that are not detected by the MaskRCNN, we first use DBScan to cluster their point clouds, and then run SegmentAnything model to extract their segmentations in 2D, and subsequently the point clouds. Finally, we perform object completion by projecting and extruding the bottom surface for all detected objects down to the table.

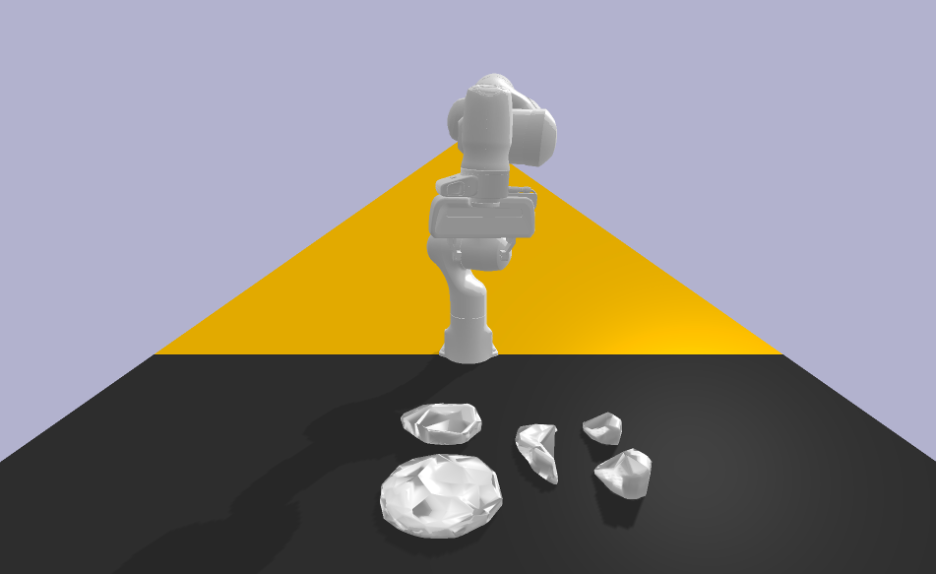

Next, we import the reconstructed object models into our physical simulator PyBullet, and directly deploy our planning algorithm with the learned mechanisms to compute plans. Since we use the same robot model in simulation, we can directly execute the planned robot trajectories in the real world. In practice, we execute in a closed loop manner. If the grasp fail (which can be detected by the gripper sensor), we move to arm to its neutral position and replan. After each placement action and pushing action, we use the vision pipeline to obtain an updated world state and replan.

Vision Pipeline Visualizations

Hardware setup.

Percieved 2D image, with overlaid object segmentation masks.

Segmented 3D objects and their reconstructed mesh.

Loaded objects in PyBullet Simulation.

Execution Examples

hook-and-grasp(banana, ball)

push-to-edge-and-grasp(plate)